What is the standard error of the difference in two proportions?

The standard error of the difference in two proportions takes different forms depending on whether we are constructing a confidence interval (for the difference in proportions) or carrying out a hypothesis test (for testing the significance of a difference in the two proportions). The following are the three cases for the standard error.

Case 1: The standard error used for the confidence interval of the difference in two proportions is given by:

$$\mathrm{SE} = \sqrt{\frac{p_1(1-p_1)}{n_1} + \frac{p_2(1-p_2)}{n_2}}$$

where $n_1$ is the size of Sample 1, $n_2$ is the size of Sample 2, $p_1$ is the sample proportion of Sample 1 and $p_2$ is the sample proportion of Sample 2.

Case 2: The standard error used for hypothesis testing of difference in proportions with $H_0: \pi_1 = \pi_2$ is given by:

$$\mathrm{SE} = \sqrt{p(1-p)\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$$

where $p$ is the pooled sample proportion given by $p = \frac{X_1 + X_2}{n_1 + n_2}$, where $X_1$ is the number of successes in Sample 1, $X_2$ is the number of successes in Sample 2, $n_1$ is the size of Sample 1 and $n_2$ is the size of Sample 2.

Case 3: The standard error used for hypothesis testing of difference in proportions with $H_0: \pi_1 - \pi_2 = d$ is given by:

$$\mathrm{SE} = \sqrt{\frac{(\tilde{p}_2 + d)(1 - \tilde{p}_2 - d)}{n_1} + \frac{\tilde{p}_2(1 - \tilde{p}_2)}{n_2}}$$

where $n_1$ is the size of Sample 1, $n_2$ is the size of Sample 2, $p_1$ is the sample proportion of Sample 1, $p_2$ is the sample proportion of Sample 2 and $\tilde{p}_2 = \frac{n_1(p_1 - d) + n_2 p_2}{n_1 + n_2}$.

Derivations

In the following we give a step-by-step derivation for the standard error for each case.

Suppose that we have two samples: Sample 1 of size $n_1$ and Sample 2 of size $n_2$. Let Sample 1 consist of $n_1$ elements $x_{11}, x_{12}, \ldots, x_{1n_1}$. Each element $x_{1i}$ (for $i = 1, \ldots, n_1$) could take the value 1 representing a success or the value 0 representing a failure. Let $\pi_1$ be the (true and unknown) population proportion for the elements found in Sample 1. That is, an element $x_{1i}$ (for $i = 1, \ldots, n_1$) of Sample 1 has a probability $\pi_1$ of showing a value of 1 (i.e. of being a success). Similarly, Sample 2 is defined by the elements $x_{21}, x_{22}, \ldots, x_{2n_2}$, and $\pi_2$ is the (true and unknown) population proportion for the elements found in Sample 2. Let us also define $X_1$ to be the number of successes in Sample 1, i.e., $X_1 = \sum_{i=1}^{n_1} x_{1i}$, and let $X_2$ be the number of successes in Sample 2, i.e., $X_2 = \sum_{i=1}^{n_2} x_{2i}$.

We are after:

Case 1: A confidence interval for the difference in the (population) proportions, i.e., $\pi_1 - \pi_2$,

Case 2: Testing the hypothesis of whether or not the two (population) proportions are equal, i.e., $\pi_1 = \pi_2$, or,

Case 3: Testing the hypothesis of whether or not the two (population) proportions differ by some particular number $d$, i.e., $\pi_1 - \pi_2 = d$.

However, we do not know the true values of the population parameters $\pi_1$ and $\pi_2$, and hence we rely on estimates. Let $p_1$ be the sample proportion of successes for Sample 1. Thus:

$$p_1 = \frac{X_1}{n_1} = \frac{1}{n_1}\sum_{i=1}^{n_1} x_{1i}$$

Let $p_2$ be the sample proportion of successes for Sample 2. Thus:

$$p_2 = \frac{X_2}{n_2} = \frac{1}{n_2}\sum_{i=1}^{n_2} x_{2i}$$

We are going to assume that the sampled elements are independent (that is, whether one sample element is 1 or 0 has no effect on whether another element is 1 or 0). Note that each element in Sample 1 follows the Bernoulli distribution with parameter $\pi_1$ and each element in Sample 2 follows the Bernoulli distribution with parameter $\pi_2$. Let us find the probability distributions of $p_1$ and $p_2$. Let us first start with that for $p_1$ and the one for $p_2$ will follow in a similar fashion.

Since each $x_{1i}$ is Bernoulli distributed with parameter $\pi_1$, and assuming independence, $X_1$ follows the binomial distribution with mean $n_1\pi_1$ and variance $n_1\pi_1(1-\pi_1)$. Moreover, since the $x_{1i}$'s are i.i.d. (independent and identically distributed), then by the Central Limit Theorem, for sufficiently large $n_1$, $X_1$ is approximately normally distributed. Hence:

$$X_1 \sim N\left(n_1\pi_1,\; n_1\pi_1(1-\pi_1)\right)$$

Dividing by $n_1$ divides the mean by $n_1$ and the variance by $n_1^2$. Thus:

$$p_1 = \frac{X_1}{n_1} \sim N\left(\pi_1,\; \frac{\pi_1(1-\pi_1)}{n_1}\right)$$

Similarly, we can derive the probability distribution for $p_2$, which is given by:

$$p_2 = \frac{X_2}{n_2} \sim N\left(\pi_2,\; \frac{\pi_2(1-\pi_2)}{n_2}\right)$$

From the theory of probability, a well-known result states that the sum (or difference) of two independent normally distributed random variables is normally distributed. Here $p_1$ and $p_2$ are independent, which follows from the fact that the sample elements are independent. Thus the difference in sample proportions $p_1 - p_2$ is normally distributed. The mean of $p_1 - p_2$ is given by $E(p_1 - p_2) = E(p_1) - E(p_2) = \pi_1 - \pi_2$. Moreover, because $p_1$ and $p_2$ are independent, their variances add, and we have $\mathrm{Var}(p_1 - p_2) = \mathrm{Var}(p_1) + \mathrm{Var}(p_2) = \frac{\pi_1(1-\pi_1)}{n_1} + \frac{\pi_2(1-\pi_2)}{n_2}$. The probability distribution of the difference in sample proportions is given by:

$$p_1 - p_2 \sim N\left(\pi_1 - \pi_2,\; \frac{\pi_1(1-\pi_1)}{n_1} + \frac{\pi_2(1-\pi_2)}{n_2}\right)$$
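As a rough sanity check on this result, the following sketch simulates many pairs of independent Bernoulli samples and compares the empirical mean and variance of the difference in sample proportions with the theoretical values. The function names, sample sizes, population proportions, number of replications and seed are all illustrative assumptions, not values taken from the derivation.

```python
import random
from statistics import mean, pvariance


def simulate_differences(n1, n2, pi1, pi2, reps, seed=0):
    """Simulate `reps` values of p1 - p2 from independent Bernoulli samples."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    diffs = []
    for _ in range(reps):
        # Number of successes in each sample (sum of Bernoulli draws).
        x1 = sum(rng.random() < pi1 for _ in range(n1))
        x2 = sum(rng.random() < pi2 for _ in range(n2))
        diffs.append(x1 / n1 - x2 / n2)
    return diffs


def theoretical_variance(n1, n2, pi1, pi2):
    """Var(p1 - p2) = pi1(1 - pi1)/n1 + pi2(1 - pi2)/n2 for independent samples."""
    return pi1 * (1 - pi1) / n1 + pi2 * (1 - pi2) / n2


# Illustrative (assumed) settings.
n1, n2, pi1, pi2 = 50, 80, 0.60, 0.45
diffs = simulate_differences(n1, n2, pi1, pi2, reps=4000)

print("empirical mean:      ", mean(diffs))
print("theoretical mean:    ", pi1 - pi2)
print("empirical variance:  ", pvariance(diffs))
print("theoretical variance:", theoretical_variance(n1, n2, pi1, pi2))
```

With a few thousand replications the empirical figures should land close to the theoretical ones, although they will not match exactly because of simulation noise.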

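Case 1: We would like to find the confidence interval for the true difference in the two population proportions, that is, $\pi_1 - \pi_2$.

Since: $p_1 - p_2 \sim N\left(\pi_1 - \pi_2,\; \frac{\pi_1(1-\pi_1)}{n_1} + \frac{\pi_2(1-\pi_2)}{n_2}\right)$ then:

$$\frac{(p_1 - p_2) - (\pi_1 - \pi_2)}{\sqrt{\frac{\pi_1(1-\pi_1)}{n_1} + \frac{\pi_2(1-\pi_2)}{n_2}}} \sim N(0, 1).$$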

The variance of $p_1 - p_2$ is unknown and must be estimated in order to derive the confidence interval. We use $p_1$ as an estimate for $\pi_1$ and $p_2$ as an estimate for $\pi_2$. Thus we replace $\pi_1$ with $p_1$ and $\pi_2$ with $p_2$ in the standard deviation and obtain the following estimated standard error:

$$\mathrm{SE} = \sqrt{\frac{p_1(1-p_1)}{n_1} + \frac{p_2(1-p_2)}{n_2}}$$

The $100(1-\alpha)\%$ confidence interval for the difference in population proportions is given by:

$$(p_1 - p_2) \pm z_{\alpha/2}\sqrt{\frac{p_1(1-p_1)}{n_1} + \frac{p_2(1-p_2)}{n_2}}$$

where $z_{\alpha/2}$ is the standardised score with a cumulative probability of $1 - \alpha/2$.
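The Case 1 calculation can be sketched in a few lines of Python. This is only a minimal illustration: the function names and the example counts (60 successes out of 100 and 45 out of 100) are assumptions made up for the example, and the normal approximation is taken for granted.

```python
from math import sqrt
from statistics import NormalDist


def unpooled_se(x1, n1, x2, n2):
    """Estimated standard error of p1 - p2 used for the confidence interval."""
    p1, p2 = x1 / n1, x2 / n2
    return sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)


def difference_confidence_interval(x1, n1, x2, n2, confidence=0.95):
    """Normal-approximation confidence interval for pi1 - pi2."""
    p1, p2 = x1 / n1, x2 / n2
    alpha = 1 - confidence
    # Standardised score with cumulative probability 1 - alpha/2.
    z = NormalDist().inv_cdf(1 - alpha / 2)
    margin = z * unpooled_se(x1, n1, x2, n2)
    return (p1 - p2) - margin, (p1 - p2) + margin


# Hypothetical data: 60/100 successes in Sample 1, 45/100 in Sample 2.
se = unpooled_se(60, 100, 45, 100)
low, high = difference_confidence_interval(60, 100, 45, 100)
print(f"SE = {se:.4f}, 95% CI = ({low:.4f}, {high:.4f})")
```

The interval is symmetric about the observed difference $p_1 - p_2$, and a higher confidence level gives a larger $z$ and hence a wider interval.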

Case 2: Here we would like to test whether there is a significant difference between the population proportion.

This is hypothesis testing using the following null and alternative hypotheses:

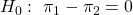

![]() :

: ![]()

![]() :

: ![]()

We know that:

![]()

and thus:

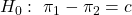

![]()

Let us consider the $z$-statistic in the case of the null hypothesis, i.e., let us see what happens to the value of $z$ when we assume that $\pi_1 = \pi_2$. First of all we replace the $\pi_1 - \pi_2$ in the numerator by 0. We need to replace the $\pi_1$ and the $\pi_2$ in the denominator by estimates. We are assuming that $\pi_1$ and $\pi_2$ are equal and so we just have to estimate one value. Every element in the sample, be it Sample 1 or Sample 2, has the same probability of being a success (since $\pi_1 = \pi_2$). Hence $\pi_1$ (or $\pi_2$) is estimated by $\frac{X_1 + X_2}{n_1 + n_2}$, i.e., the number of successes in Sample 1 plus the number of successes in Sample 2, divided by the total sample size. This is called the pooled sample proportion, because, since $\pi_1 = \pi_2$, we are combining Sample 1 with Sample 2, and thus we have just one pooled sample. So the $z$-statistic becomes:

$$z = \frac{p_1 - p_2}{\sqrt{p(1-p)\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}}$$

where $p = \frac{X_1 + X_2}{n_1 + n_2}$.

Hence the (estimated) standard error used for hypothesis testing of a significant difference in proportions is:

$$\mathrm{SE} = \sqrt{p(1-p)\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$$
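A short sketch of the Case 2 test follows, using the pooled sample proportion in the standard error. The function names and the example counts are hypothetical, and the two-sided p-value is computed from the standard normal distribution under the large-sample assumption used throughout.

```python
from math import sqrt
from statistics import NormalDist


def pooled_se(x1, n1, x2, n2):
    """Estimated standard error of p1 - p2 under H0: pi1 = pi2."""
    p = (x1 + x2) / (n1 + n2)  # pooled sample proportion
    return sqrt(p * (1 - p) * (1 / n1 + 1 / n2))


def two_proportion_z_test(x1, n1, x2, n2):
    """Return the z-statistic and two-sided p-value for H0: pi1 = pi2."""
    z = (x1 / n1 - x2 / n2) / pooled_se(x1, n1, x2, n2)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 60/100 successes in Sample 1, 45/100 in Sample 2.
z, p_value = two_proportion_z_test(60, 100, 45, 100)
print(f"z = {z:.3f}, two-sided p-value = {p_value:.4f}")
```

Note that the pooled standard error differs slightly from the unpooled one used for the confidence interval, so a test and an interval built on the same data need not agree exactly near the significance boundary.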

Case 3: Here we would like to test whether the difference between the population proportion deviates by a certain value.

This is hypothesis testing using the following null and alternative hypotheses:

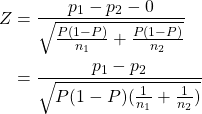

![]() :

: ![]()

![]() :

: ![]()

for some pre-defined real number ![]() .

.

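We know that:

$$p_1 - p_2 \sim N\left(\pi_1 - \pi_2,\; \frac{\pi_1(1-\pi_1)}{n_1} + \frac{\pi_2(1-\pi_2)}{n_2}\right)$$

and thus:

$$z = \frac{(p_1 - p_2) - (\pi_1 - \pi_2)}{\sqrt{\frac{\pi_1(1-\pi_1)}{n_1} + \frac{\pi_2(1-\pi_2)}{n_2}}} \sim N(0, 1)$$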

Let us consider the ![]() -statistics in the case of the null hypothesis, i.e., let us see what happens to the value of

-statistics in the case of the null hypothesis, i.e., let us see what happens to the value of ![]() when we assume that

when we assume that ![]() . First of all we replace the

. First of all we replace the ![]() in the numerator by

in the numerator by ![]() . We need to replace the

. We need to replace the ![]() and the

and the ![]() in the denominator by estimates. We will replace

in the denominator by estimates. We will replace ![]() by

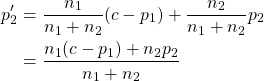

by ![]() . In Case 1, for the confidencce interval, we estimated

. In Case 1, for the confidencce interval, we estimated ![]() by the sample proportion

by the sample proportion ![]() . However here we are going to use the information that

. However here we are going to use the information that ![]() . Thus we are going to estimate

. Thus we are going to estimate ![]() by a weighted average of

by a weighted average of ![]() and

and ![]() as follows:

as follows:

The ![]() -statistic then becomes:

-statistic then becomes:

![]()

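Hence the (estimated) standard error used for hypothesis testing of a difference in proportions by a certain value is:

$$\mathrm{SE} = \sqrt{\frac{(\tilde{p}_2 + d)(1 - \tilde{p}_2 - d)}{n_1} + \frac{\tilde{p}_2(1 - \tilde{p}_2)}{n_2}}$$

where $\tilde{p}_2 = \frac{n_1(p_1 - d) + n_2 p_2}{n_1 + n_2}$.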
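The Case 3 calculation might be sketched as follows. An important caveat: the weighted-average estimate used here, $(n_1(p_1 - d) + n_2 p_2)/(n_1 + n_2)$ for $\pi_2$ with $\pi_1$ estimated by that value plus $d$, is an assumption about the form of the estimate rather than a formula confirmed by the text; it is chosen because it reduces to the pooled proportion of Case 2 when $d = 0$. The function names and example data are likewise hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def offset_se(x1, n1, x2, n2, d):
    """Estimated standard error of p1 - p2 under H0: pi1 - pi2 = d.

    ASSUMPTION: pi2 is estimated by a sample-size-weighted average of
    (p1 - d) and p2, and pi1 by that estimate plus d.
    """
    p1, p2 = x1 / n1, x2 / n2
    pi2_hat = (n1 * (p1 - d) + n2 * p2) / (n1 + n2)
    pi1_hat = pi2_hat + d
    if not (0 <= pi2_hat <= 1 and 0 <= pi1_hat <= 1):
        raise ValueError("estimated proportions fall outside [0, 1]")
    return sqrt(pi1_hat * (1 - pi1_hat) / n1 + pi2_hat * (1 - pi2_hat) / n2)


def z_test_with_offset(x1, n1, x2, n2, d):
    """Return the z-statistic and two-sided p-value for H0: pi1 - pi2 = d."""
    z = ((x1 / n1 - x2 / n2) - d) / offset_se(x1, n1, x2, n2, d)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 60/100 vs 45/100, testing a hypothesised difference of 0.05.
z, p_value = z_test_with_offset(60, 100, 45, 100, d=0.05)
print(f"z = {z:.3f}, two-sided p-value = {p_value:.4f}")
```

Setting `d=0` in this sketch gives the same standard error as the pooled formula of Case 2, which is a useful consistency check on the assumed estimate.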