The Cramer-Rao Lower Bound for the Geometric Distribution

Introduction

Suppose that we have an unbiased estimator of a parameter of a distribution. The Cramer-Rao inequality provides a lower bound for the variance of any such estimator. Once we have computed the Cramer-Rao Lower Bound (CRLB), we can check whether the unbiased estimator at hand attains it, in which case it has the minimum variance among all unbiased estimators of the parameter.

The following is the Cramer-Rao Inequality.

Let $\hat{\theta}$ be an unbiased estimator of $\theta$, which is a parameter of the distribution with probability density function $f(x;\theta)$ and log-likelihood $l(x;\theta)=\ln f(x;\theta)$. Then the Cramer-Rao Inequality is given by:

$$\mathrm{Var}(\hat{\theta})\geq\frac{1}{I(\theta)}$$

where the function $I(\theta)$, known as the Fisher Information, is given by $I(\theta)=-n\mathbb{E}\Big[\frac{\partial^2 l(x;\theta)}{\partial \theta^2}\Big]$, and $n$ is the sample size over which the estimator $\hat{\theta}$ is computed.

Thus the Cramer-Rao Lower Bound for the variance of $\hat{\theta}$ is given by $\frac{1}{I(\theta)}$, that is, $-\frac{1}{n\mathbb{E}\big[\frac{\partial^2 l(x;\theta)}{\partial \theta^2}\big]}$.

In this article, we will derive the Cramer-Rao Lower Bound for an unbiased estimator of the parameter $p$ of the geometric distribution.

The Geometric Distribution

The probability mass function of the geometric distribution (having parameter $p$) is given by:

$$f(x;p)=(1-p)^{x-1}p,\quad x=1,2,3,\dots$$

Using standard probability theory, it follows that for the geometric distribution $\mathbb{E}[x]=\frac{1}{p}$.
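The mean of the geometric distribution can be checked numerically. The following is a minimal sketch, assuming the parameterisation used in the derivation (support $x=1,2,3,\dots$ with success probability $p$). The function names `geometric_pmf` and `truncated_moments`, the truncation point `x_max`, and the example value `p = 0.25` are illustrative choices, not part of the original article.

```python
def geometric_pmf(x, p):
    """P(X = x) for x = 1, 2, 3, ... with success probability p."""
    return (1 - p) ** (x - 1) * p


def truncated_moments(p, x_max=5000):
    """Approximate the total probability mass and the mean by summing
    the series up to x_max (the tail beyond x_max is negligible here)."""
    total_mass = 0.0
    mean = 0.0
    for x in range(1, x_max + 1):
        prob = geometric_pmf(x, p)
        total_mass += prob
        mean += x * prob
    return total_mass, mean


p = 0.25  # illustrative value, chosen only for this example
total_mass, mean = truncated_moments(p)
print("total mass:", total_mass)
print("mean (series):", mean, " 1/p:", 1 / p)
```

The truncated sum of the probabilities should be very close to 1, and the truncated mean should agree with $1/p$ up to floating-point error.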

The Cramer-Rao Lower Bound for the Geometric Distribution

Consider first the Fisher Information for the Geometric Distribution with parameter $p$.

![Rendered by QuickLaTeX.com \begin{equation*}\begin{align}I(p)&=-n\mathbb{E}\Big[\frac{\delta^2 l(x;p)}{\delta p^2}\Big]\\ &=-n\mathbb{E}\Big[\frac{\delta^2}{\delta p^2} [\ln ((1-p)^{x-1}p)]\Big]\\ &=-n\mathbb{E}\Big[\frac{\delta^2}{\delta p^2} [(x-1)\ln (1-p)+\ln p]\Big]\\ &=-n\mathbb{E}\Big[\frac{\delta}{\delta p} [-\frac{x-1}{1-p}+\frac{1}{p}]\Big]\\ &=n\mathbb{E}\Big[\frac{\delta}{\delta p} [\frac{x-1}{1-p}-\frac{1}{p}]\Big]\\ &=n\mathbb{E}\Big[\frac{x-1}{(1-p)^2}+\frac{1}{p^2}\Big]\\ &=n\Big(\frac{\mathbb{E}[x]-1}{(1-p)^2}+\frac{1}{p^2}\Big)\\ &=n\Big(\frac{\frac{1}{p}-1}{(1-p)^2}+\frac{1}{p^2}\Big)\text{ (since }\mathbb{E}[x]=\frac{1}{p})\\ &=n\Big(\frac{1-p}{p(1-p)^2}+\frac{1}{p^2}\Big)\\ &=n\Big(\frac{1}{p(1-p)}+\frac{1}{p^2}\Big)\\ &=n\Big(\frac{p+(1-p)}{p^2(1-p)} \Big)\\ &=\frac{n}{p^2(1-p)}. \end{align} \end{equation*}](https://datasciencegenie.com/wp-content/ql-cache/quicklatex.com-20f0a478ed24e56e84274add94ab11f8_l3.png)

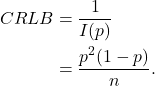
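The closed form $I(p)=\frac{n}{p^2(1-p)}$ can be sanity-checked without relying on the algebra. The sketch below approximates the second derivative of the log-likelihood with a central finite difference and takes its expectation over a truncated series. The function names, the step size `h`, the truncation point `x_max`, and the value `p = 0.3` are assumptions made for illustration only.

```python
import math


def log_pmf(x, p):
    """Log-likelihood of one observation: ln((1-p)^(x-1) * p)."""
    return (x - 1) * math.log(1 - p) + math.log(p)


def fisher_information_numeric(p, n=1, x_max=2000, h=1e-4):
    """Approximate -n * E[d^2 l / dp^2] using a central finite difference
    for the second derivative and a truncated sum for the expectation."""
    expected_second_derivative = 0.0
    for x in range(1, x_max + 1):
        prob = (1 - p) ** (x - 1) * p
        second = (log_pmf(x, p + h) - 2 * log_pmf(x, p) + log_pmf(x, p - h)) / h**2
        expected_second_derivative += prob * second
    return -n * expected_second_derivative


def fisher_information_closed_form(p, n=1):
    """Closed form from the derivation: n / (p^2 * (1 - p))."""
    return n / (p**2 * (1 - p))


p = 0.3  # illustrative value
numeric = fisher_information_numeric(p)
closed = fisher_information_closed_form(p)
print("numeric:", numeric, " closed form:", closed)
```

The two values should agree to several significant figures. The small remaining gap comes from the finite-difference approximation, not from the derivation.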

Thus, the Cramer-Rao Lower Bound for the variance of an unbiased estimator $\hat{p}$ of the parameter $p$ of the geometric distribution is given by:

$$\mathrm{Var}(\hat{p})\geq\frac{1}{I(p)}=\frac{p^2(1-p)}{n}.$$
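As a quick numerical illustration, the bound obtained by inverting the Fisher Information, $\frac{p^2(1-p)}{n}$, can be evaluated for a few sample sizes. This is a minimal sketch: the helper name `geometric_crlb` and the values of `p` and `n` are hypothetical and chosen only to show how the bound shrinks as $n$ grows.

```python
def geometric_crlb(p, n):
    """Cramer-Rao Lower Bound p^2 * (1 - p) / n for the variance of an
    unbiased estimator of p, based on n independent observations."""
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    if n < 1:
        raise ValueError("n must be a positive sample size")
    return p**2 * (1 - p) / n


p = 0.2  # illustrative value
for n in (10, 100, 1000):
    print(f"n = {n:5d}  CRLB = {geometric_crlb(p, n):.3e}")
```

The bound is inversely proportional to $n$, so multiplying the sample size by ten divides the smallest achievable variance of an unbiased estimator by ten.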